Quora

Empowering users to curate their feed experiences

Desktop web • mobile web • iOS

Quora, widely recognized for answering specific questions users have, also allows users to explore their intellectual curiosities further through a personalized feed. Quora infers various topics and interests to generate a feed, supplemented with followed content (users, topics, or communities). A successful feed experience, captivating users for an indefinite duration, relies on 1) Quora’s machine learning (ML) models to deliver relevant content and 2) interfaces that allow users to curate content by providing feedback for the ML models to use. Combining these elements leads to serendipitous discoveries that align with users' personal interests, maximizing engagement and retention, and driving revenue growth.

My role

Design lead, experimentation, A/B testing, AI/ML, strategy, systems design, data analysis

Team

1 product manager, 3-4 engineers, 1-2 data scientists, 1 user researcher

Timeline

Mar 2021 – Mar 2023

Overview

Problem

Quora's revenue relies heavily on feed engagement, which requires delivering highly relevant, personalized content to each user. To achieve this, ML algorithms need explicit and implicit feedback from users to understand their preferences and tailor the feed accordingly.

Goal

Increase feed engagement and revenue by empowering users to personalize their feeds, maximizing feedback collection through iterative weekly experiments.

Context

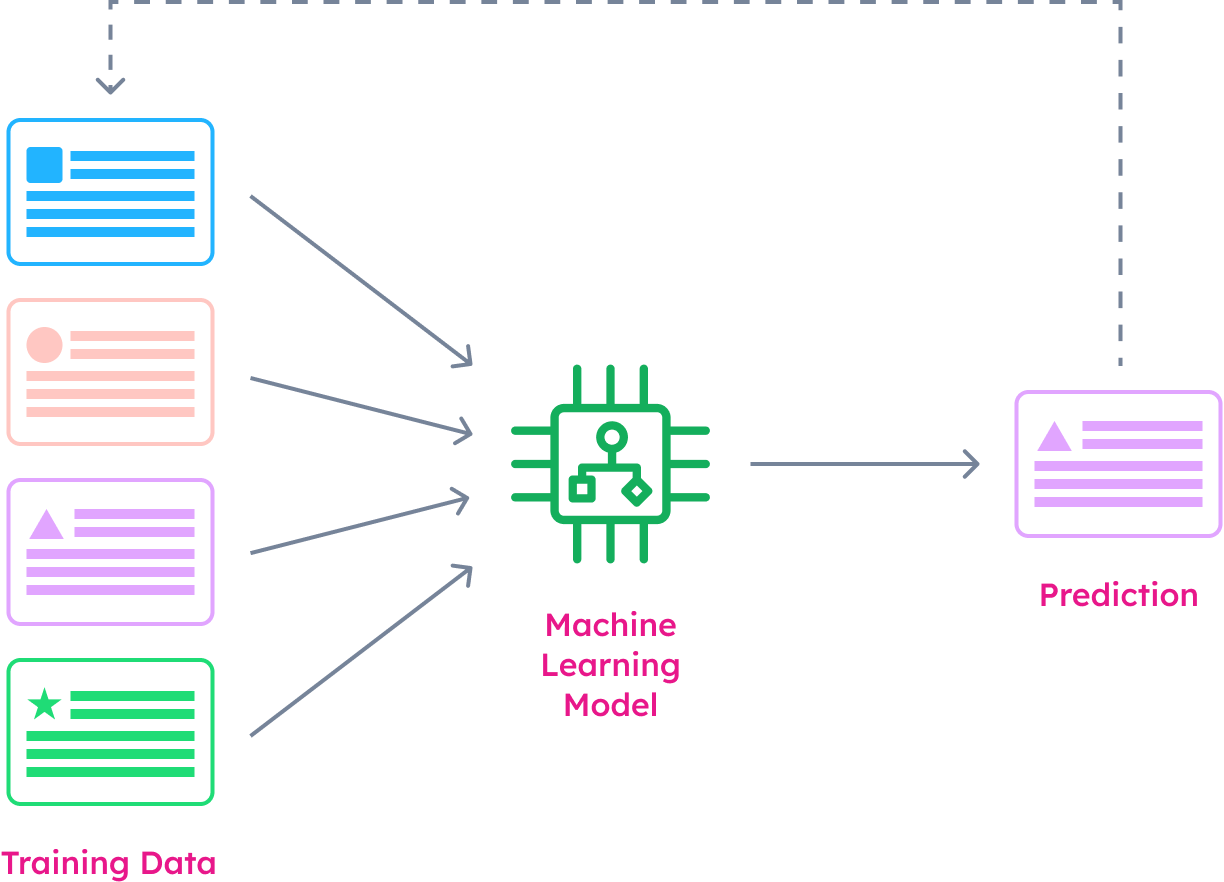

Machine learning predictions

Machine learning is a method of making predictions based on previous data. Feed systems have endless content options, so the algorithm curates a subset for each user based on their likes and dislikes. This "training data" is used by an ML model to output a prediction of the best option. Feedback given on the prediction refines the training data, creating a continuous cycle that picks the next prediction and generates an infinite, personalized feed.
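The cycle described above can be sketched in a few lines of Python. This is a toy illustration, not Quora's system: the per-topic score table standing in for "training data", the function names, and the sample items are all assumptions made for the example.

```python
from collections import defaultdict


def pick_next(candidates, topic_scores):
    """Predict: return the candidate whose topic currently scores highest."""
    return max(candidates, key=lambda item: topic_scores[item["topic"]])


def record_feedback(topic_scores, item, liked, step=1.0):
    """Fold one piece of feedback back into the (toy) training data."""
    topic_scores[item["topic"]] += step if liked else -step


def run_feed(candidates, user_likes, rounds):
    """Simulate the predict -> show -> feedback -> update cycle."""
    topic_scores = defaultdict(float)  # hypothetical stand-in for training data
    remaining = list(candidates)
    shown = []
    for _ in range(min(rounds, len(remaining))):
        item = pick_next(remaining, topic_scores)
        remaining.remove(item)
        shown.append(item["id"])
        # The simulated user likes an item only if its topic is in user_likes.
        record_feedback(topic_scores, item, liked=item["topic"] in user_likes)
    return shown, dict(topic_scores)


# Hypothetical content pool and user preference.
candidates = [
    {"id": 1, "topic": "cooking"},
    {"id": 2, "topic": "physics"},
    {"id": 3, "topic": "cooking"},
    {"id": 4, "topic": "physics"},
]
shown, scores = run_feed(candidates, user_likes={"physics"}, rounds=4)
print(shown, scores)
```

After the first disliked cooking story, the loop moves the remaining physics stories ahead of the remaining cooking story, which is the behavior the feedback cycle is meant to produce.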

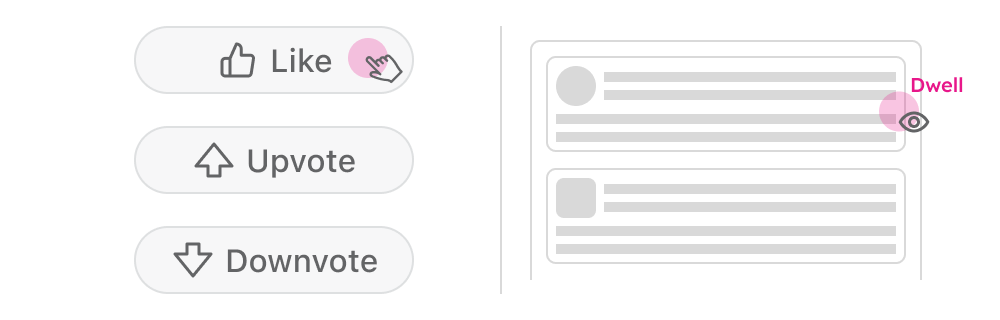

Design captures feedback signals for ML

For each prediction (i.e. piece of content) shown, the ML model needs to learn the user’s reaction to it. Design enables ML to provide relevant content by building interfaces to accurately capture and interpret user preferences and intent through feedback known as signals.

EXPLICITNESS

How clearly the user's intent is captured through an action – explicitly selecting an action or implicitly dwelling

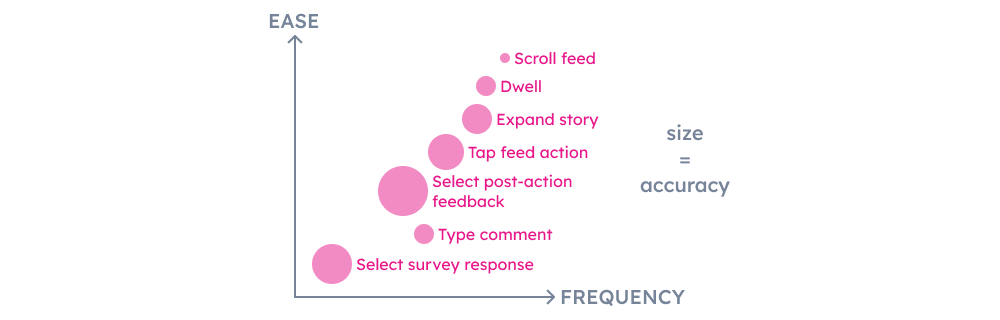

FREQUENCY

How often a user repeats an action that provides a signal – lighter-weight actions are performed more frequently

ATTRIBUTABILITY

How clearly and accurately an action can be attributed to a signal – more explicit and clear = more accurate prediction

CONSISTENCY

The ability to measure the same signal over and over again – varying sizes add bias and noise to the signal’s clarity

Categorizing user feedback actions

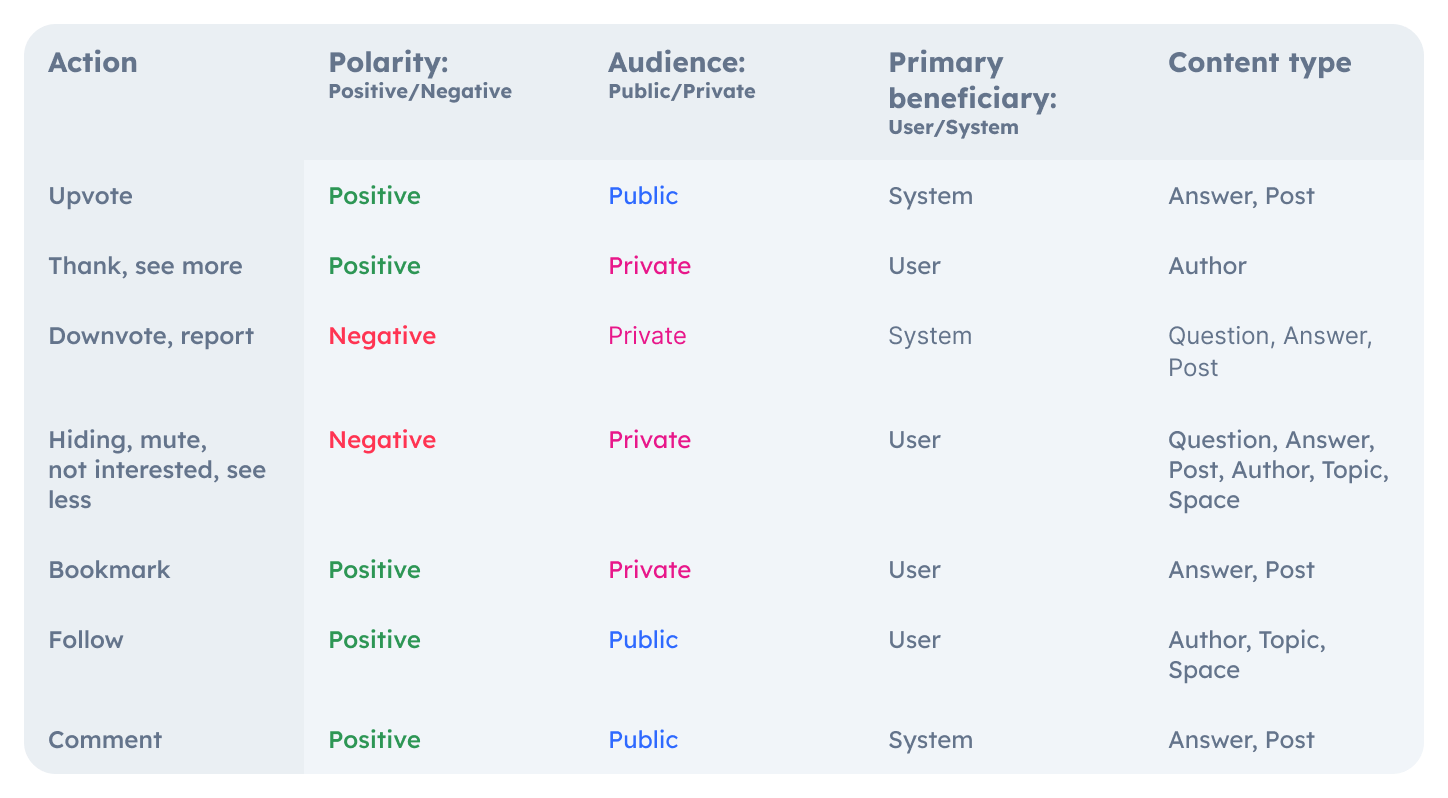

Each user action provides different feedback signals. These actions are categorized to align the UI with the interpretation of the ML models: whether the feedback is positive or negative, whether it is public or private, whether it affects the user or the system, and which feed content types it applies to.

Feedback taxonomy chart
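One way to picture the categorization is as a small data structure with one field per dimension. The sketch below is illustrative only: the class names, the three sample actions, and the values assigned to them are assumptions for the example, not the contents of the actual taxonomy chart.

```python
from dataclasses import dataclass
from enum import Enum


class Sentiment(Enum):
    POSITIVE = "positive"
    NEGATIVE = "negative"


class Visibility(Enum):
    PUBLIC = "public"
    PRIVATE = "private"


class Affects(Enum):
    USER = "user"
    SYSTEM = "system"


@dataclass(frozen=True)
class FeedbackAction:
    name: str
    sentiment: Sentiment
    visibility: Visibility
    affects: Affects
    content_types: frozenset  # feed content types the action applies to


# Illustrative entries; the values are assumed, not taken from the chart.
TAXONOMY = [
    FeedbackAction("upvote", Sentiment.POSITIVE, Visibility.PUBLIC,
                   Affects.SYSTEM, frozenset({"answer", "post"})),
    FeedbackAction("downvote", Sentiment.NEGATIVE, Visibility.PRIVATE,
                   Affects.SYSTEM, frozenset({"answer"})),
    FeedbackAction("hide", Sentiment.NEGATIVE, Visibility.PRIVATE,
                   Affects.USER, frozenset({"answer", "question", "post"})),
]


def actions_for(content_type, sentiment):
    """List the action names available for a content type and sentiment."""
    return [
        action.name
        for action in TAXONOMY
        if content_type in action.content_types and action.sentiment is sentiment
    ]


print(actions_for("answer", Sentiment.NEGATIVE))
```

Keeping each dimension as its own field means the UI and the ML side can query the same table, for example to check which negative actions exist for a given content type.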

Solutions

Feed design focused on optimizing for a system, using user research to guide what levers to pull. Feed metrics are highly sensitive, so multiple experiments were conducted to A/B test incremental changes. Here are the final versions shipped as a result.

Increase positive feedback by simplifying the action bar

Engaging with the action bar is the easiest and most effective way for users to give explicit feedback on stories. While the action bar supports both positive and negative actions, the primary goal is to capture positive sentiment through upvoting, and the secondary goal is to capture negative sentiment through downvoting.

User research revealed that users found the action bar cluttered and confusing and did not understand what some of the actions represented. By simplifying the action bar and clarifying its actions, users are more likely to participate in giving feedback and upvoting content. Giving feedback allows users to tailor their feed to display the most relevant and interesting content. Data shows that users who actively give feedback, rather than passively scrolling, are also more likely to have longer feed sessions.

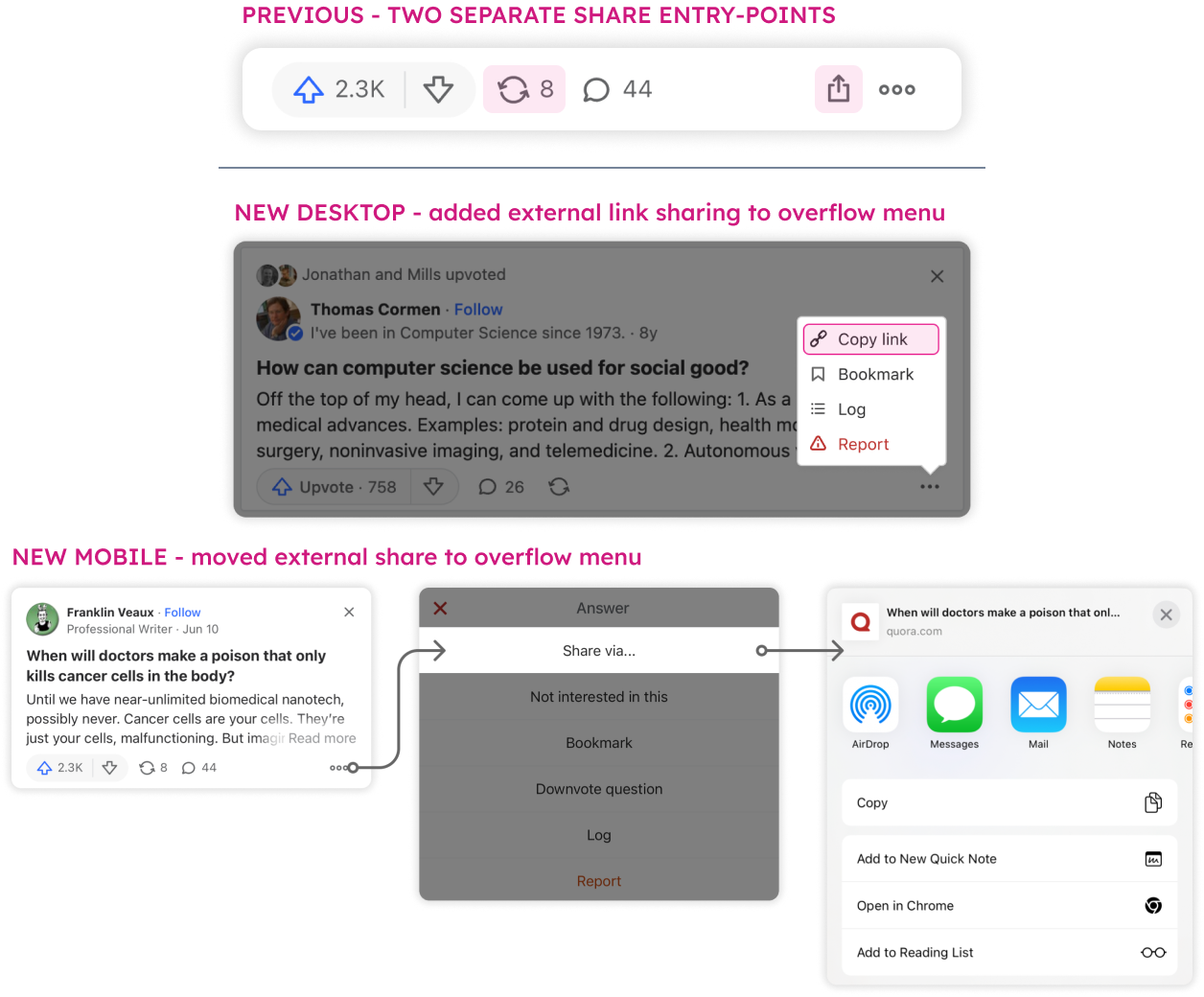

SIMPLIFICATION BY CONSOLIDATING INTERNAL AND EXTERNAL SHARE ENTRY-POINTS

After an initial dip, external shares recovered to a +0.3% increase after four weeks. Upvotes rose by +0.2%, supporting the hypothesis that a simplified action bar would increase usage.
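For readers less familiar with A/B test reporting, the sketch below shows how a percentage change like the ones in this section is typically computed, assuming the figures are relative changes of a test variant against a control group. The function name and the per-user rates are hypothetical and chosen only so the arithmetic is easy to follow.

```python
def relative_lift(control_rate, treatment_rate):
    """Percentage change of the treatment metric relative to control."""
    if control_rate == 0:
        raise ValueError("control rate must be non-zero")
    return (treatment_rate - control_rate) / control_rate * 100


# Hypothetical rates, not Quora data: upvotes per user in each group.
control_upvotes_per_user = 0.500
treatment_upvotes_per_user = 0.501

lift = relative_lift(control_upvotes_per_user, treatment_upvotes_per_user)
print(f"{lift:+.1f}% upvotes")
```

Changes of this size are small per experiment, which fits the approach of shipping many incremental, individually tested changes.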

CLARIFYING ACTIONS THROUGH LABELS TO INCREASE UPVOTES

Experimented with a variety of different UIs – adding labels, clarifying meta-data information, icons, and button stylings. +2.1% increase in upvote participation, with an overall +2.4% increase in upvotes. +4.2% increase in downvote participation, resulting in an overall +5.4% increase in downvotes, and a -3% decrease in hides.

Interpret negative feedback by improving follow-up response mechanisms

Feedback response menus are crucial for understanding why users take specific actions, allowing the ML model to better categorize feedback and make more accurate content predictions, especially for hiding or downvoting content. For instance, does hiding content mean the user dislikes the content, the topic, or the author? Does downvoting indicate they want to read a better answer or dislike the question?

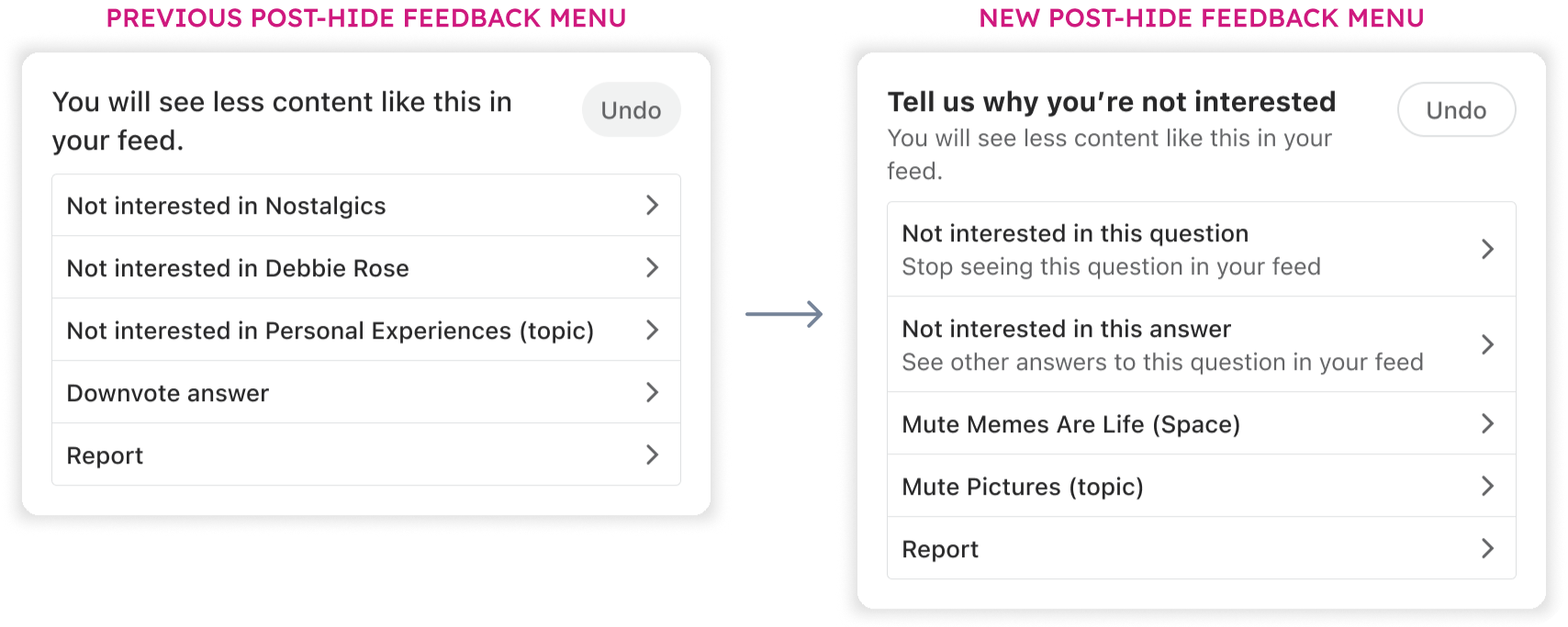

The previous post-hide feedback menu was unengaging, with redundant options, dead-ends, and unclear language. The most impactful change was adding an option for users to specify whether they were uninterested in the question or the answer, helping the ML model understand why the content was hidden. Previously, after downvoting an answer, users were shown only a confirmation message, leaving them in a dead-end state without additional follow-up options or the ability to find a more satisfying answer.

POST-HIDE FEEDBACK RESPONSE MENU

Added an option to specify whether the question or the answer was irrelevant, consolidated redundant actions, ordered the options by importance, and simplified the language with explanations. +23% increase in post-hide responses and a +0.02% increase in feed engagement.

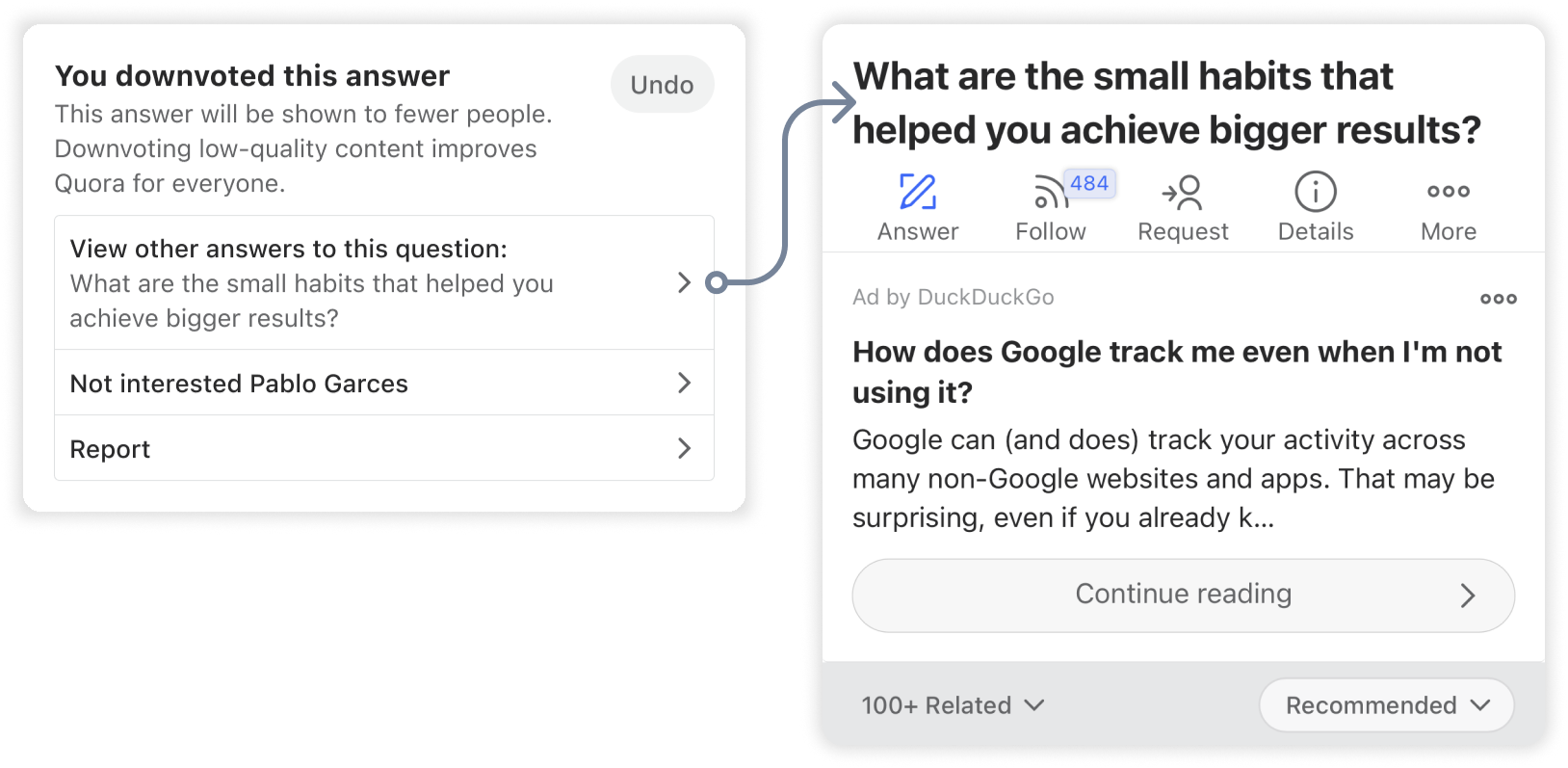

POST-DOWNVOTE ANSWER FLOW

Repurposed the feedback response menu to provide additional follow-up options (based on ML needs) or the ability to read a satisfying answer to the question. Major increase in question page click-throughs, but negatively impacted feed engagement metrics, as the question page was not optimized to send users back to feed (follow-up work).

Gathering more explicit, frequent feedback

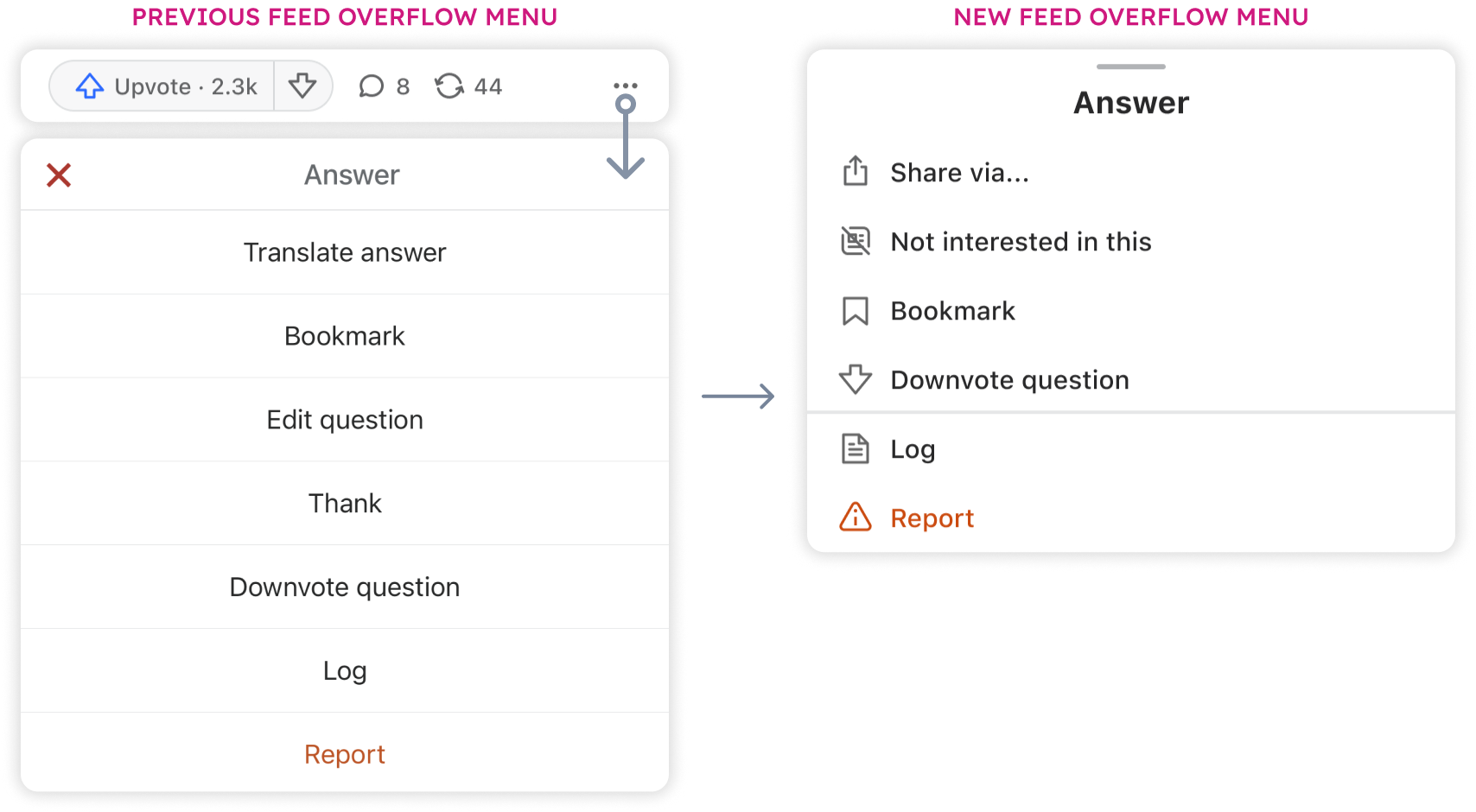

The more explicit and frequent the feedback, the more accurately and quickly ML models can adapt to provide the best content. Beyond the action bars and post-feedback response menus, there were limited avenues for additional explicit feedback. The overflow menu offered options like "Thank" and "Downvote Question," and periodic surveys appeared on feed stories, but these methods were infrequently used.

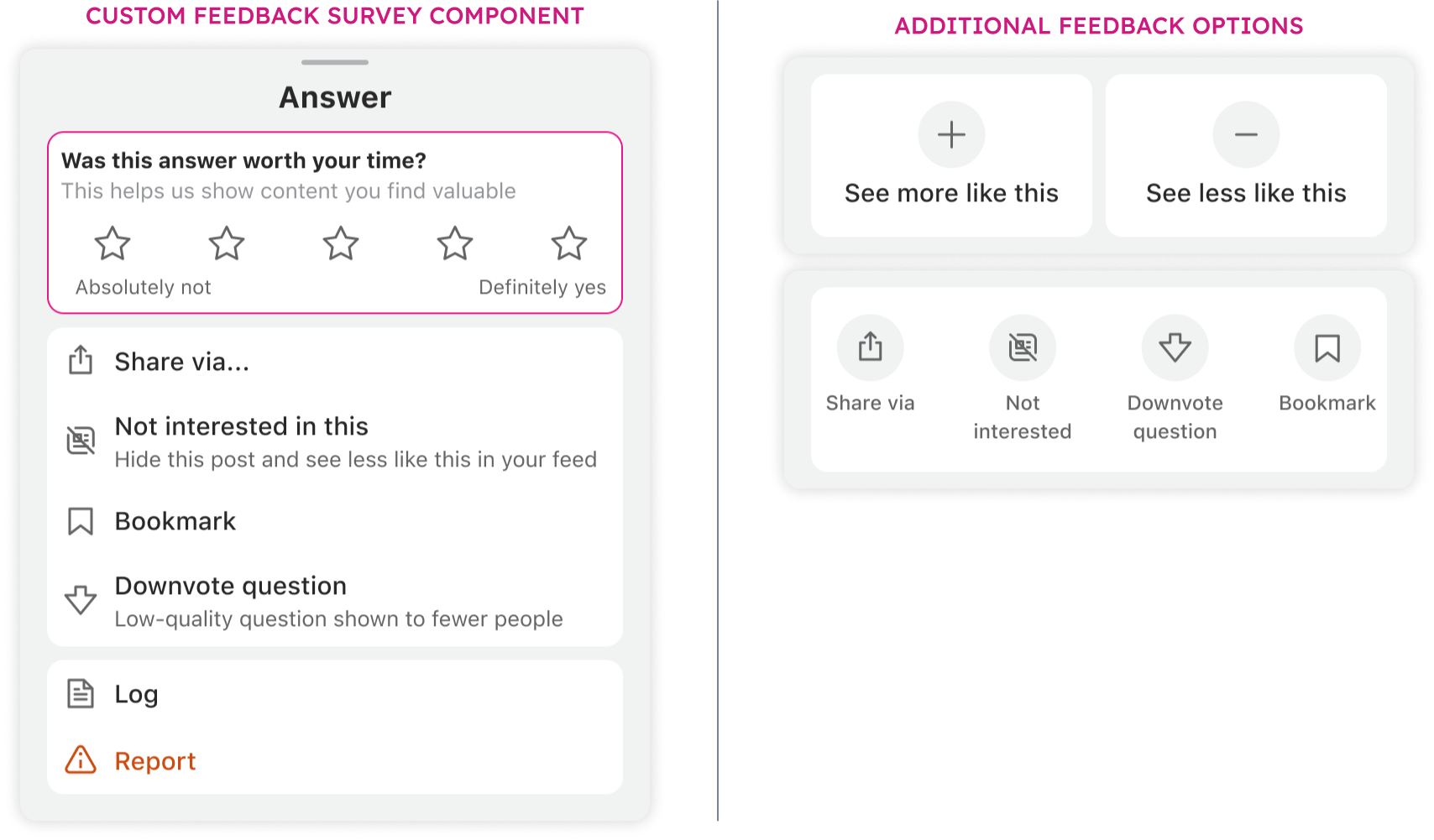

The overflow menu's low usage stemmed from its cluttered design and hard-to-parse options. We removed low-impact options and added icons and sections for quicker scannability, along with a persistent, explicit option to hide irrelevant content. Additionally, we revamped the overflow menu to include custom feedback components, such as the feed story survey, to gather explicit feedback more frequently.

IMPROVING FEED STORY OVERFLOW MENUS WITH BETTER OPTIONS AND SCANNABILITY

+6% increase in hide story engagement, +8% increase in users selecting an overflow menu option, -5% decrease in dismissing the menu.

ADDING ADDITIONAL FEEDBACK ENTRY-POINTS IN OVERFLOW MENUS

Feedback components would be tailored to the type of signal the ML model needed. For instance, a rating scale would assess content value, while a binary option would indicate a preference for seeing more or less of similar content. Initial experiments demonstrated significant increases in signal collection, positively impacting feed engagement.

Outcomes

Impact

Through a number of experiments and projects, the feed design team contributed significantly towards overall feed metric goals:

~10% year-over-year growth in monthly active users, scaling from ~300 million to ~400 million over three years, contributing significantly towards a ~$1M increase in revenue per year